Workflow of a Machine Learning Project

What are we dealing with?

(Image caption: ... Exactly)

#1 You really got to identify your problem.

This is the first, and an important, step in building a Machine Learning project. Whether the problem is supervised or unsupervised doesn't matter; the gist is that knowing the problem sets the base for all the steps that follow.

Let's just assume you don't know your problem ...

Me: Take this data-set.

You: Okay. What's it about?

Me: ...

You: Is there any dependent feature in here?

Me: ....

You: Uhm ... So do you want me to find patterns in this?

Me: ...

You don't want to fall into this trap. That's why you must set a base for yourself to build your project upon.

Data

Do you even have it?

(Image caption: You might wanna check that)

After identifying your problem, you must look for data-sets, unless, of course, you already have a custom data-set that you collected by surveying.

#2 Have the right data-set which suits your problem.

There are numerous data-sets available on the internet, and one of them might serve your purpose. The key is to look closely at the features present in your data-set. Sometimes a data-set is missing key features that affect your predictions or that would turn out to be the missing factor in finding patterns. Sometimes the data simply doesn't have enough observations. These are a few of the points to keep in mind before settling on a data-set.
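To make those checks concrete, here is a minimal sketch of a first look at a data-set. The toy rows, the feature names and the `summarize` helper are all made up for illustration; the idea is simply to count the observations, list the features you need but don't have, and count the missing values per feature.

```python
# Hypothetical toy data-set: each dict is one observation, None marks a missing value.
rows = [
    {"age": 34, "income": 52000, "city": "Pune"},
    {"age": None, "income": 61000, "city": "Delhi"},
    {"age": 45, "income": None, "city": "Delhi"},
    {"age": 29, "income": 48000, "city": None},
    {"age": 51, "income": 75000, "city": "Mumbai"},
]


def summarize(rows, required_features):
    """Report the size, the required features that are absent, and missing values per feature."""
    # Every feature name that appears in at least one observation.
    present = set().union(*(row.keys() for row in rows)) if rows else set()
    missing_counts = {
        name: sum(1 for row in rows if row.get(name) is None)
        for name in sorted(present)
    }
    return {
        "n_observations": len(rows),
        "absent_features": sorted(set(required_features) - present),
        "missing_counts": missing_counts,
    }


# "education" is an assumed key feature that this toy data-set does not contain.
report = summarize(rows, required_features=["age", "income", "education"])
print(report)
```

If a feature your problem depends on shows up under `absent_features`, or the number of observations is tiny, that is a hint to keep looking for a better data-set.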

Popular resources where you can search for data-sets include UCI and Kaggle, which host loads of *free* data-sets that you can use. You might also want to have a look at Dataset Search, a tool recently released by Google.

Prepare your Data

Once you have your data, you can start thinking about the different techniques that might solve your problem. But before that, there is one last step you need to perform to get your data-set ready for the next stage.

#3 Preprocessing

Even though you have a good data-set with enough features to solve your problem, it might still be dirty, which is what most real-world data actually is.

Why? Maybe because the observations of a particular feature are missing for a particular entity. Maybe because someone went out of the box to do something, aka Outliers. Or maybe because some of the observations overlap in terms of dependency.

These are the common problems that have to be dealt with before plugging the data into a model. And with the common problems come the common methods that are applied to data-sets.

- One easy way to handle an entity with missing data is to ignore the entity itself. This, of course, comes with risks: it reduces the number of observations, and some of the dropped entities might be the ones that primarily affect your results, and you don't want to miss out on those.

- Other common methods include Standardization, Normalization and Scaling, each of which comes in different types that suit different data-sets with different types of noise.
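The methods in the list above can be sketched in a few lines of plain Python. This is only an illustration on made-up numbers, with hypothetical helper names: it drops observations with missing values, then applies z-score standardization to one feature and min-max normalization to another. Real projects usually lean on a library for this.

```python
from statistics import mean, pstdev


def drop_incomplete(rows):
    """Ignore every observation that has at least one missing (None) value."""
    return [row for row in rows if all(value is not None for value in row)]


def standardize(values):
    """Z-score: shift to zero mean and scale to unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def min_max_normalize(values):
    """Rescale the values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Made-up observations with two features; None marks a missing value.
raw = [(1.0, 200.0), (2.0, None), (3.0, 400.0), (None, 100.0), (5.0, 600.0)]

clean = drop_incomplete(raw)
z_scores = standardize([row[0] for row in clean])
scaled = min_max_normalize([row[1] for row in clean])

print(len(raw), "->", len(clean), "observations after dropping incomplete ones")
print(z_scores)
print(scaled)
```

Notice that two of the five observations are gone after the first step, which is exactly the risk described above.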

A good read on pre-processing is this blog post by Sudharsan Asaithambi.

One thing that should be highlighted about #2 and #3 is that some people find them boring and would even prefer to skip some of the pre-processing. The short answer to that is that we don't have any choice. If these steps are not done correctly and not given a major portion of your time and attention, the results won't be fruitful, and that is the last thing you want after building a good model for your problem.

Have Fun!

Now that you've got your data ready to be put into analysis, have fun!

#4 Modelling

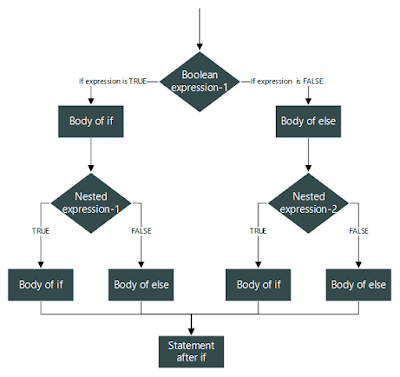

Machine Learning is usually classified into two broad categories: Supervised Learning and Unsupervised Learning.

Try to put your problem in either of them. (Unless, of course, your problem comes under a totally different category of Machine Learning - Reinforcement Learning.)

Supervised Learning

You've got various questions (*Independent Variables*). You've got the answers (*Dependent Variables*) to them as well. You want to find the answers to some new questions (*Test Data*) that might be similar to the questions (*Training Data*) you already have answers to.

That's Supervised Learning.

Now we've got two broad categories of problems that might come under this - Regression and Classification.

- Regression: You've got observations that are numeric or in the form of some series, and you've got their specific outputs, which are in the same format. You want the outputs for new observations. If you've got a problem like that, it may be solved through regression.

(Image: Polynomial Regression - Wikipedia)

Technically, there are several regression algorithms you can apply depending on your data-set, including Linear Regression in one or multiple variables and Polynomial Regression.
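As a rough illustration of the simplest case, here is Linear Regression in one variable fitted with ordinary least squares. The numbers are made up and `fit_line` is a hypothetical helper, so treat it as a sketch of the idea and not as the way any particular library does it.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept (one variable)."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Covariance of x and y, and variance of x (both left unnormalized).
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return slope, intercept


# Made-up training data: numeric observations and their numeric outputs.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]

slope, intercept = fit_line(xs, ys)

# Output for a new observation the model has not seen.
prediction = slope * 6.0 + intercept
print(slope, intercept, prediction)
```

For this toy data the fitted line has a slope of about 1.97 and an intercept of about 1.09.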

- Classification: You've got data about something and the data is categorical, that is, the whole data can be divided into different categories. You have some observations and know which category each of them falls into. Based on those observations, you want to know which category a new observation might fall into. These kinds of problems come under classification.

(Image: KNN - Wikipedia)

There are many algorithms for this, ranging from a simple K-Nearest Neighbors algorithm to Ensemble Methods and various Deep Learning models.
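To show how simple the simple end of that range is, here is a bare-bones K-Nearest Neighbors sketch: a new observation gets the category that is most common among its `k` closest training observations. The points, the labels and the `knn_predict` helper are invented for the example.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Return the majority label among the k training points closest to the query."""
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Made-up observations whose categories are already known.
train_points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
train_labels = ["small", "small", "small", "large", "large", "large"]

# New observations whose category we want to know.
first = knn_predict(train_points, train_labels, (2.0, 2.0))
second = knn_predict(train_points, train_labels, (6.0, 7.0))
print(first, second)
```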

Unsupervised Learning

You've got data about some field. You just want to find patterns in the data and see whether it can be divided into categories.

(Image: Clustering - Wikipedia)

Clustering: These methods look for patterns in the data and see where there are *Clusters* of similar data. These clusters can then be used to categorize the data and to extract the features that might be responsible for the grouping, and those features can then be used in the real world.

K-Means Clustering is a popular algorithm used to find clusters in data.
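A stripped-down sketch of the K-Means idea looks like this: assign every point to its nearest centroid, move each centroid to the mean of its points, and repeat. The data is made up, `k_means` is a hypothetical helper, and taking the first `k` points as the starting centroids is a simplifying assumption; real implementations pick the starting centroids more carefully.

```python
import math


def k_means(points, k, iterations=10):
    """Naive K-Means: alternate between assigning points and updating centroids."""
    # Assumption for the sketch: the first k points serve as initial centroids.
    centroids = list(points[:k])
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for point in points:
            nearest = min(range(k), key=lambda i: math.dist(point, centroids[i]))
            clusters[nearest].append(point)
        # Update step: each centroid moves to the mean of its cluster.
        centroids = [
            tuple(sum(coords) / len(cluster) for coords in zip(*cluster))
            if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters


# Made-up two-dimensional data with two visible groups.
points = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (9.0, 8.5), (2.0, 1.5), (8.5, 9.0)]

centroids, clusters = k_means(points, k=2)
print(centroids)
print(clusters)
```

Nobody told the algorithm which point belongs where; the two groups come out of the data itself.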

Feed your Eyes

The next step really pulls a lot of information out of the model and might even reveal something that helps you make your model better.

#5 Visual Insights and Tuning of Parameters

Visualizing can be done with charts or different kinds of graphs. Tools and APIs for this are available in every language that supports the development of Machine Learning models. As long as your data has two or three dimensions, you can plot it.

There is often a separate validation data-set kept in reserve to test our trained model on and to tune the parameters of our model accordingly. The parameters are constant values associated with our model that define its behavior.

After getting a clear view of some features against the others or against the output, one gets an idea of how to tune the parameters of the model and make it better. This guides us in choosing appropriate parameters and doesn't leave us guessing. Besides that, it also helps in presentation, which is discussed in the following section.
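Here is one way the validation idea might look in code, assuming a K-Nearest Neighbors classifier whose parameter `k` we want to tune. The training set, the validation set and the candidate values of `k` are all invented for this sketch: each candidate is scored on the reserved validation data and the best-scoring one is kept.

```python
import math
from collections import Counter


def knn_predict(train, query, k):
    """Majority label among the k training observations closest to the query."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def accuracy(train, validation, k):
    """Fraction of validation observations that are classified correctly."""
    hits = sum(knn_predict(train, point, k) == label for point, label in validation)
    return hits / len(validation)


# Made-up training data; the last observation is deliberately a bit noisy.
train = [
    ((1.0, 1.0), "a"), ((1.0, 2.0), "a"), ((2.0, 1.0), "a"),
    ((6.0, 6.0), "b"), ((6.0, 7.0), "b"), ((7.0, 6.0), "b"),
    ((3.5, 3.5), "b"),
]

# Validation data kept in reserve: never used for training, only for tuning.
validation = [((2.0, 2.0), "a"), ((6.5, 6.5), "b"), ((3.0, 3.0), "a")]

# Try a few candidate values of the parameter k and keep the best one.
scores = {k: accuracy(train, validation, k) for k in (1, 3, 5)}
best_k = max(scores, key=scores.get)
print(scores, best_k)
```

With `k=1` the noisy training point misleads the model on one validation observation, while larger values of `k` outvote it, which is the kind of thing that tuning on a validation set is meant to surface.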

A good short read can be this article.

Presentation

Perhaps the most important and most overlooked part of the whole project journey.

#6 Present your findings beautifully

(Image: *Classic Presentation Pic* - thenextweb)

Conveying your results is a major factor. Your results might be impressive, but sometimes they are not in the format or structure that your client or viewer wants. So you must be able to present them in a format in which they can relate the results to their problem and actually take further action based on the model, which is the final goal of any model - to improve the current situation or develop something new based on past experience.
