Decision Trees

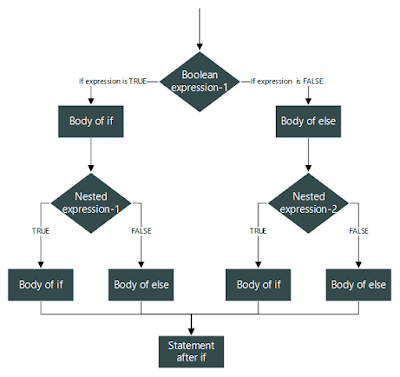

Ever implemented if-else?

Well, if you have, you've probably implemented a Decision Tree.

No kidding.

Let's dive into it.

Decision Tree is a popular tool used in many Machine Learning models which help to separate the data into different categories by sketching out linear boundaries in the sample space and classify by asking questions in a highly optimized way.

Don't worry ... Just hang on

Can you separate the circles from crosses by drawing a vertical or a horizontal line?

Of course you can. A vertical line just after the 2 marked on horizontal axis. Or maybe a horizontal line right through the center.

Some might have also answered a diagonal line, one end touching top left corner, but again, look what I asked for.

Can you separate this data with a horizontal or vertical line?

|

| Image: Udacity |

Nope. You can't.

Did you think of this line?

|

| Image: Udacity |

Well this does tell you that left of the line are all the crosses, but you really can't tell what's to the right of the line.

Now, can you separate the same data with two vertical or horizontal lines?

|

| Image: Udacity |

This would be my answer.

You see that's what Decision Trees do.

Still not clear? Let's dive a bit deeper.

Decision Trees try to take in the data and try to plot vertical or horizontal lines to separate the different classes of data.

How does it do that?

There can be various factors considering which a Decision Tree model puts a linear boundary, but the gist is it tries to find the feature along which if we split, it will give maximum number of observation points their correct area of classification.

For example the last case we discussed, among x (horizontal) and y (vertical), the model chose to split on x-axis, which indeed gave a line that clearly separated out most out of the red points, and was able to give a definite result on one of its sides, whereas at any point along y-axis we can't put a line which will give is clear results.

How does the model chose which feature to select?

There is this thing called entropy.

In thermodynamics, entropy is described as the measure of randomness. So you might expect randomness to associate with randomness of data and less the randomness of data in dispersion, more easy it will be classify. Close.

So what entropy, in our case, actually is?

It is the average number of yes/no questions we need to ask to guess in which class a particular point falls.

Can entropy be in fractions?

Yes, of course, it's average.

Let's take some cases.

For example the last case we discussed, among x (horizontal) and y (vertical), the model chose to split on x-axis, which indeed gave a line that clearly separated out most out of the red points, and was able to give a definite result on one of its sides, whereas at any point along y-axis we can't put a line which will give is clear results.

How does the model chose which feature to select?

There is this thing called entropy.

In thermodynamics, entropy is described as the measure of randomness. So you might expect randomness to associate with randomness of data and less the randomness of data in dispersion, more easy it will be classify. Close.

So what entropy, in our case, actually is?

It is the average number of yes/no questions we need to ask to guess in which class a particular point falls.

Can entropy be in fractions?

Yes, of course, it's average.

Let's take some cases.

How many questions do you need to ask to tell if it's color if you pull out one random ball from here?

Zero? Correct.

That's the entropy here.

So in the actual data classification too, you'll be needing 0 splits.

Same question. Different Case.

Let's just break it up a bit.

Probability(Blue), P(B) = 1/3

Probability(Yellow), P(Y) = 2/3

Number of questions needed to ask if it's yellow, N(Y) = 1

Similarly, N(B) = 1

So Entropy is calculated as P(B)*N(B) + P(Y)*N(Y)

= 1/3 * 1 + 2/3 * 1

= 1

Let's take a complicated one.

This is an example from a lecture by Luis Serrano.

How many questions do you need to ask to guess what letter is pulled out?

Probabilities.

One possible series of questions can be represented like this.

This way, for every, letter minimum number of questions asked would be 2.

Calculating ...

Seems, at least 2 questions must be asked in order to know the result.

Any other solution possible ... !?

Yes.

Take this approach.

If you look at it closely, you can see if the letter was A, you would've only required one question. But if it was C or D, you would've required 3 questions. So it looks like we've gained as well as lost something.

Let's analyze the trade-off.

Plugging in the probabilities ...

Seems like we're in a better position.

And indeed we are.

This way we got a lower number of average questions asked, which is indeed our entropy.

So you see entropy tries to find out most optimized way of asking the questions to get the data segregated.

In Decision Trees you saw, the algorithm tries to select a feature and put a threshold at some point along it and divides data into two halves according to that.

And in the last few examples, you saw how entropy calculation somewhat structures into an if-else type model. And at every if-else point, we indeed get divided into two parts.

Information Gain.

This is what combines the two.

At this point, I would really recommend you to watch this clip, which explains this concept pretty well *with formula*.

The gist is that Information Gain tries to extract how much information will be gained about each feature if we make it split further. And this calculated using entropy of that feature.

*The Decision Tree Algorithms tries to maximize this.*

Based on this, Decision trees decide which feature to split further and makes best split based on the entropy of that feature.

*Some visualizations to ponder over*

Both of them are visualizations of Decision Tree Models trained on same data.

Spotted any Difference?

- One has too many boundaries all over the place, one has less boundaries.

- One tries to cover all the points, one just leaves some outliers.

Which one is better?

Depends on your data.

What was the difference in training.

I just told the training model that if at any point in decision tree you find 30 *a random number* points in one part, don't make any effort to divide them further, that is 30 points together must have enough similarity to be in one class in my data.

Similarly, you can provide many other restrictions and outputs to the training model in order to control the model to behave according to you. This is in fact the tuning of parameters all about.

Hope you understood the concept of Decision Trees and a little bit of math behind it.

So, now go and build a strong if-else model ;)

Let's analyze the trade-off.

Plugging in the probabilities ...

Seems like we're in a better position.

And indeed we are.

This way we got a lower number of average questions asked, which is indeed our entropy.

So you see entropy tries to find out most optimized way of asking the questions to get the data segregated.

Wrapping it up ...

Till now, you understood what entropy is and how it's calculated. Just a small step remains to show you how it relates to decision trees.In Decision Trees you saw, the algorithm tries to select a feature and put a threshold at some point along it and divides data into two halves according to that.

And in the last few examples, you saw how entropy calculation somewhat structures into an if-else type model. And at every if-else point, we indeed get divided into two parts.

Information Gain.

This is what combines the two.

At this point, I would really recommend you to watch this clip, which explains this concept pretty well *with formula*.

|

| ** Math Guy ** |

The gist is that Information Gain tries to extract how much information will be gained about each feature if we make it split further. And this calculated using entropy of that feature.

*The Decision Tree Algorithms tries to maximize this.*

Based on this, Decision trees decide which feature to split further and makes best split based on the entropy of that feature.

*Some visualizations to ponder over*

|

| Linear Boundaries marked all over the space. |

|

Spotted any Difference?

- One has too many boundaries all over the place, one has less boundaries.

- One tries to cover all the points, one just leaves some outliers.

Which one is better?

Depends on your data.

What was the difference in training.

I just told the training model that if at any point in decision tree you find 30 *a random number* points in one part, don't make any effort to divide them further, that is 30 points together must have enough similarity to be in one class in my data.

Similarly, you can provide many other restrictions and outputs to the training model in order to control the model to behave according to you. This is in fact the tuning of parameters all about.

Hope you understood the concept of Decision Trees and a little bit of math behind it.

So, now go and build a strong if-else model ;)

Comments

Post a Comment